Less than 50 days after the release of YOLOv4, YOLOv5 improves the state of the art for realtime object detection.

Realtime object detection is improving quickly. The rate of improvement is improving even more quickly. The results are stunning.

On March 18, Google open sourced their implementation of EfficientDet, a fast-training model that comes in various sizes, one of which offers realtime output. On April 23, Alexey Bochkovskiy et al. open sourced YOLOv4. On June 9, Glenn Jocher open sourced an implementation of YOLOv5.

Just Looking to Train YOLOv5?

Skip this info post and jump straight to our YOLOv5 tutorial. You'll have a trained YOLOv5 model on your custom data in minutes.

The Evolution of YOLO Models

YOLO (You Only Look Once) is a family of models that Joseph Redmon (known online as "PJ Reddie") originally introduced in a 2016 publication. YOLO models are famous for being highly performant yet incredibly small, making them ideal candidates for realtime conditions and on-device deployment environments.

PJ Reddie's research team subsequently introduced YOLOv2 and YOLOv3, both of which continued to improve model performance and speed. In February 2020, PJ Reddie announced that he would discontinue research in computer vision.

In April 2020, Alexey Bochkovskiy, Chien-Yao Wang, and Hong-Yuan Mark Liao introduced YOLOv4, demonstrating impressive gains.

Notably, many of YOLOv4's improvements came from improved data augmentation as much as from model architecture. (We've written a breakdown of YOLOv4 as well as a guide on how to train a YOLOv4 model on custom objects.)

YOLOv5: The Leader in Realtime Object Detection

Glenn Jocher released YOLOv5 with a number of differences and improvements. (Notably, Glenn is the creator of mosaic augmentation, one of the techniques that improved YOLOv4.) The release of YOLOv5 includes four model sizes: YOLOv5s (smallest), YOLOv5m, YOLOv5l, and YOLOv5x (largest).

Let's break down YOLOv5. How does it compare?

First, this is the first release of models in the YOLO family to be written natively in PyTorch rather than PJ Reddie's Darknet. Darknet is an incredibly flexible research framework, but it is not built with production environments in mind, and it has a smaller community of users. Taken together, this makes Darknet more challenging to configure and less production-ready.

Because YOLOv5 is implemented in PyTorch from the start, it benefits from the established PyTorch ecosystem: support is simpler, and deployment is easier. Moreover, because PyTorch is a more widely known research framework, iterating on YOLOv5 may be easier for the broader research community. This also makes deploying to mobile devices simpler, as the model can be exported to ONNX and CoreML with ease.

Second, YOLOv5 is fast, blazingly fast. In a YOLOv5 Colab notebook running a Tesla P100, we saw inference times as low as 0.007 seconds per image, meaning roughly 140 frames per second (FPS)! By contrast, YOLOv4 achieved 50 FPS after having been converted to the same Ultralytics PyTorch library.
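The FPS figure follows directly from the per-image latency. As a quick sanity check, here is a minimal sketch of the conversion; the helper name `latency_to_fps` is ours for illustration and is not part of the YOLOv5 repository.

```python
def latency_to_fps(seconds_per_image: float) -> float:
    """Convert per-image inference latency (in seconds) to frames per second."""
    if seconds_per_image <= 0:
        raise ValueError("latency must be positive")
    return 1.0 / seconds_per_image


# Latency reported in the post for YOLOv5 on a Tesla P100.
yolov5_fps = latency_to_fps(0.007)
print(f"YOLOv5: {yolov5_fps:.0f} FPS")

# Per-image latency implied by the 50 FPS reported for YOLOv4.
yolov4_latency = 1.0 / 50
print(f"YOLOv4: {yolov4_latency * 1000:.0f} ms per image")
```

At 0.007 seconds per image the exact value is just under 143 FPS, which the post rounds to 140. The 50 FPS reported for YOLOv4 corresponds to 20 ms per image.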

Third, YOLOv5 is accurate. In our tests on the blood cell count and detection (BCCD) dataset, we achieved roughly 0.895 mean average precision (mAP) after training for just 100 epochs. Admittedly, we saw comparable performance from EfficientDet and YOLOv4, but it is rare to see such across-the-board performance improvements without any loss in accuracy.
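For readers new to the metric: mAP is built on deciding whether a predicted box matches a ground-truth box, and that decision is typically made with intersection over union (IoU). The following is a minimal sketch of IoU only, not the evaluation code we used. It assumes boxes given as `(x_min, y_min, x_max, y_max)` tuples, and the function name `iou` is our own.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes.

    Each box is assumed to be (x_min, y_min, x_max, y_max).
    """
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlapping region (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - intersection

    return intersection / union if union > 0 else 0.0


# Two 10x10 boxes overlapping in a 5x5 region: 25 / (100 + 100 - 25).
print(iou((0, 0, 10, 10), (5, 5, 15, 15)))
```

A prediction is usually counted as correct when its IoU with a ground-truth box exceeds a chosen threshold (0.5 is a common choice). Average precision is then computed per class from the resulting precision-recall curve and averaged across classes.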

Fourth, YOLOv5 is small. Specifically, a weights file for YOLOv5 is 27 megabytes. Our weights file for YOLOv4 (with Darknet architecture) is 244 megabytes. YOLOv5 is nearly 90 percent smaller than YOLOv4. This means YOLOv5 can be deployed to embedded devices much more easily.
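The "nearly 90 percent" figure can be checked from the two file sizes quoted above. This is a small sketch of the arithmetic; `percent_smaller` is a hypothetical helper name.

```python
def percent_smaller(new_size_mb: float, old_size_mb: float) -> float:
    """Return how much smaller new_size_mb is than old_size_mb, in percent."""
    return (old_size_mb - new_size_mb) / old_size_mb * 100.0


# Weights file sizes quoted in the post: 27 MB (YOLOv5) vs 244 MB (YOLOv4, Darknet).
reduction = percent_smaller(27, 244)
print(f"{reduction:.1f}% smaller")  # 88.9% smaller
```

Put another way, the YOLOv4 weights file is roughly nine times the size of the YOLOv5 one.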

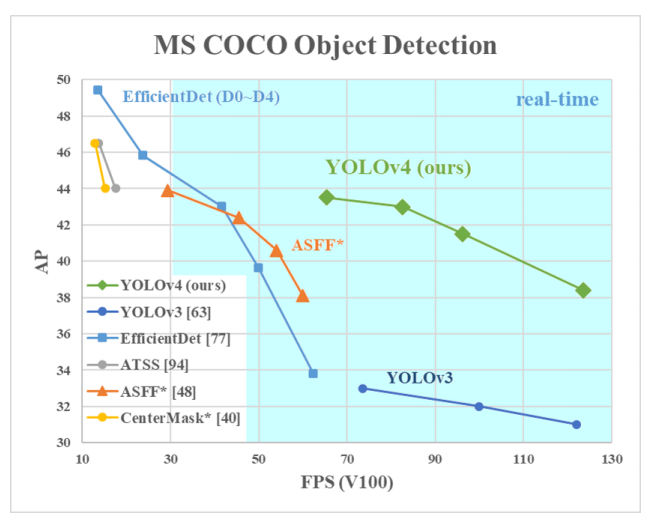

Many of these changes are well-summarized in YOLOv5's graphic measuring performance.

Get Started with YOLOv5

We're eager to see what you are able to build with new state-of-the-art detectors.

To that end, we've published a guide on how to train YOLOv5 on a custom dataset, making it quick and easy. If you would like to use standard COCO weights, see this notebook.

You can always visit YOLOv5.com for more resources as they become available.

Stay tuned for additional deeper dives on YOLOv5, and good luck building!

Want to be the first to know about new computer vision tutorials and content like our synthetic dataset creation guide? Subscribe to our updates.

Roboflow accelerates your computer vision workflow through automated annotation quality assurance, universal annotation format conversion (like PASCAL VOC XML to COCO JSON and creating TFRecords), team sharing and versioning, and easy integration with popular open source computer vision models. Getting started with your first 1000 images is completely free.
